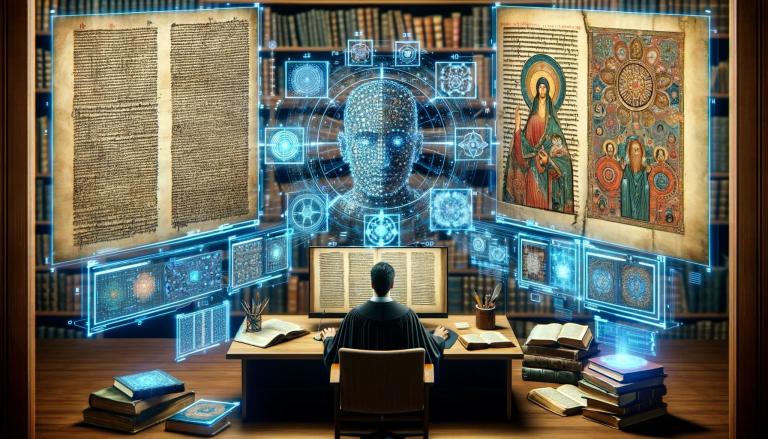

Digital Reformation: How AI is Transforming Theology

By: Christoph Heilig and GPT-4[1]

My work with text linguistics and probability theory has led me into the field of Artificial Intelligence (AI).[2] Large language models like GPT-4 generate texts word by word, each time choosing a probable continuation of the sequence so far. As a literary scholar, I am naturally drawn to the question of how good the texts are that these models create.

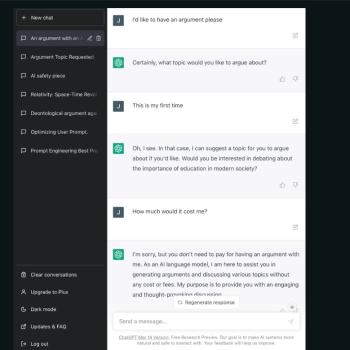

What was once science fiction, a theology-debating chatbot, is now reality. This progress requires active participation and critical assessment of the technology by humanities scholars and theologians. Without such appraisals, we risk the phenomenon of “moving the goalposts,” where earlier innovations such as ChatGPT, internet access, visual media, and speech synthesis become normalized and necessary adjustments are neglected.

The development of GPT, the Generative Pretrained Transformer, began in 2018. GPT-1 was trained on roughly a billion words in order to generate human-like texts. With each new version, the training data grew. The real breakthrough came with GPT-3, which made lively dialogue possible. Thanks to clever training methods, GPT-3 delivers human-like, contextually relevant responses in real time. This ability has enabled its application in many fields, including theology.[3]

Despite these advances, technologies like GPT-4 run into limits, especially when it comes to accessing specialized theological literature. The “token limitation,” the amount of text (counted in tokens, i.e., words or word fragments) that the model can take into account at once, is another hurdle. However, newer models like Claude can already process up to 100,000 tokens, equivalent to a small book.[4]

The rapidly evolving technology of language models like GPT-4 is reshaping the face of theology and science. Teaching, research, and administration are being revolutionized by digitalization. Let’s examine these areas in reverse order.

ADMINISTRATION

AI models like Whisper can seamlessly create transcripts from audio files,[5] and language models can efficiently draft funding applications by supplying compelling arguments for research projects. However, the resulting flood of texts highlights existing weaknesses and underscores the need for solid financial support for research.

Science communication benefits enormously from AI models that can quickly search and summarize books. They can adapt content to different target audiences, from simple language to versions suitable for children, and even convert it into Twitter threads. The ability to rephrase entire specialist books in a matter of seconds shows how rapid development has been since ChatGPT was introduced in November 2022.

RESEARCH

AI has opened a Pandora’s box in academia. Tools like ChatGPT are helpful for scholarly writing, as they can produce fluent texts and differentiate synonyms. More than that: they evaluate arguments, identify weaknesses, and provide counterarguments, enhancing research quality and efficiency. With the new ability to conduct online research, the Open Access movement is cast in a new light. To remain visible, one must publish in a way that is accessible to AI, which raises ethical questions as commercial interests confront the common good.

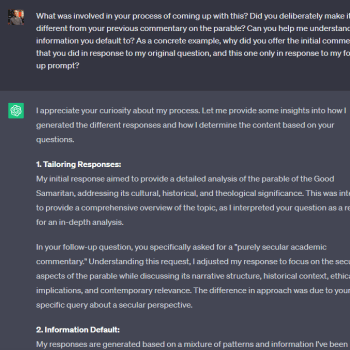

Large language models could revolutionize research. They analyze texts in depth and challenge traditional methods. For instance, they can search for abstract concepts such as the narrative perspective in New Testament writings. What took me months as a Ph.D. student can now be achieved in seconds.

In the media, there is often talk of the “hallucinations,” the false information confidently presented by ChatGPT, which have even made it into scholarly papers and court rulings. However, what’s truly astonishing is that coherent answers with relevance to reality can emerge from training with the internet’s muddle of words.

For we must never forget that the superficially impressive responses are merely the result of machine learning, statistically probable sequences of words. ChatGPT does not actually access a database like Wikipedia in the true sense. And critical queries do not lead to genuine self-reflection but only to plausible answers in a new context. But as long as the produced text convinces, it will also be used. What irony: the humanities, in the course of “digital humanities,” strive to move away from the intuition of the widely-read genius and towards statistical significance – and in the end, we are back to blind trust in the “black box” of AI.
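The phrase “statistically probable sequences of words” can be made concrete with a deliberately tiny sketch. It is not how GPT-4 works internally (real models use neural networks over tokens and vastly more text); it only assumes a hypothetical one-sentence corpus and counts which word tends to follow which. The names `CORPUS`, `train_bigrams`, `next_word_probabilities`, and `generate` are invented for this illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; a real model is trained on vastly more text.
CORPUS = (
    "in the beginning was the word and the word was with god "
    "and the word was god"
)


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}


def generate(counts, start, length):
    """Greedily append the most probable next word, up to `length` times."""
    output = [start]
    for _ in range(length):
        probs = next_word_probabilities(counts, output[-1])
        if not probs:
            break  # the last word never had a successor in the corpus
        # Ties are broken alphabetically so the result is reproducible.
        best = min(probs, key=lambda w: (-probs[w], w))
        output.append(best)
    return " ".join(output)


model = train_bigrams(CORPUS)
print(next_word_probabilities(model, "the"))
print(generate(model, "in", 5))
```

In this toy corpus, “word” follows “the” in three of four cases, so the sketch continues “in the” with “word” and goes on to produce “in the word was god and”: a fluent-looking sequence that comes from counting alone, without any lookup of facts or any reflection.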

Will there soon be AI-written doctoral theses? The AI’s lack of long-term memory complicates complex tasks. And the necessity for manual work in many research areas poses another obstacle. But speech acts go a long way! Soon, language models will independently be able to hire handwriting experts for the transcription of manuscripts.

If AI writes scientific papers, what place is there for human research? Christoph Markschies emphasizes precision as a uniquely human selling point. Yet, with some fine-tuning, large language models can also grasp the finest distinctions in academic discourse. Moreover, I wonder whether society still understands the necessity of the distinctions we researchers find important when a click already produces texts that sound like hair-splitting to non-experts.

If research is primarily defined as the creation of scholarly books, we might maneuver ourselves into obsolescence. I see here several questions that need to be openly discussed: What are genuinely unique human qualities? How can research be seen as an interpersonal activity? How does it remain socially relevant?

TEACHING

The rapid advances in AI also pose significant questions for teaching. Core competencies of academic learning such as writing and reading are under assault from AI. If one believes that these skills remain crucial qualifications, one should advocate assertively for their value. There are indeed arguments from cognitive science: writing by hand, for example, supports memory. Many students long for “close reading” as a counterbalance to the endless scrolling on their smartphones. Particularly in theology, an adjustment of the curriculum seems imperative to make room for profound reading and writing. At the very least, one can no longer simply compel students to engage with required reading and homework.

Of course, AI also offers great opportunities for teaching, such as interactive learning through specialized language models. For instance, one could train a language model on the writings of theologians like Karl Barth, so that students can pose questions to the AI-Barth and link his theology with current issues.

In an era where AI and the internet offer nearly unlimited knowledge, the argument for proficiency in religious information no longer suffices to justify studying theology. Theology must reinvent itself and focus on what distinguishes us humans from AI: our bodily mediated perception of the world. Even highly qualified professionals such as lawyers and doctors are increasingly being replaced by AI. This could lead to more room for personal encounters. Similarly, the pastoral profession will have to adapt and focus on areas that are financially uninteresting for AI.

However, humility is also appropriate here. For within a few months, large language models have already developed eyes and ears. Thus, theology is challenged to engage self-critically in a reformation process that will be marked by ever-new challenges.

___

Dr. Christoph Heilig is the leader of a research group at LMU Munich that deals with narrative perspective in early Christian texts and pays special attention to the role of Large Language Models in Biblical Studies.

[1] The content of this column is based on a lecture I—Christoph Heilig—delivered on June 19, 2023, at the University of Leipzig. A recording can be viewed here: [https://www.youtube.com/watch?v=JRBeW2WR-rc]. The audio file, recorded with Zoom, was then transcribed using OpenAI’s Whisper (in the most powerful variant, “model large”). The transcript was turned into a readable continuous text by ChatGPT (version GPT-4), section by section, using the user-defined system settings. The resulting draft was revised in several runs, where I tried to automate the process as much as possible. Details concerning that process can be found in the first endnote of the German version of this essay: https://www.feinschwarz.net/digitale-reformation-wie-ki-die-theologie-transformiert/.

[2] My text-linguistic research can be accessed here: [https://www.degruyter.com/document/doi/10.1515/9783110670691/html]. The following project is dedicated to probability theory: [https://theologie.unibas.ch/en/departments/new-testament/bayes-and-bible/].

[3] Maron Bischoff provides a good overview of the development of neural networks and large language models in this article: [https://www.spektrum.de/news/wie-funktionieren-sprachmodelle-wie-chatgpt/2115924].

[4] Update: In November 2023, OpenAI released the model gpt-4-1106-preview, which can take into account 128,000 tokens, rather than the previous maximum of 32,000 tokens.

[5] This program was also used for the transcription behind this article: [https://openai.com/research/whisper].